In the preceding sections, I have explained why classic, text-based filters systematically fail in the fight against the misuse of image-generating AIs: they only see the words of the prompt, not the raw, potentially harmful data fed in as a basis.

Anyone who uses real videos as a basis for digital violation through DeepFakes can exploit this loophole today with almost no risk. Therefore, any serious AI security architecture needs a preliminary protective layer that rigorously monitors the entrance before the actual AI generation even begins.

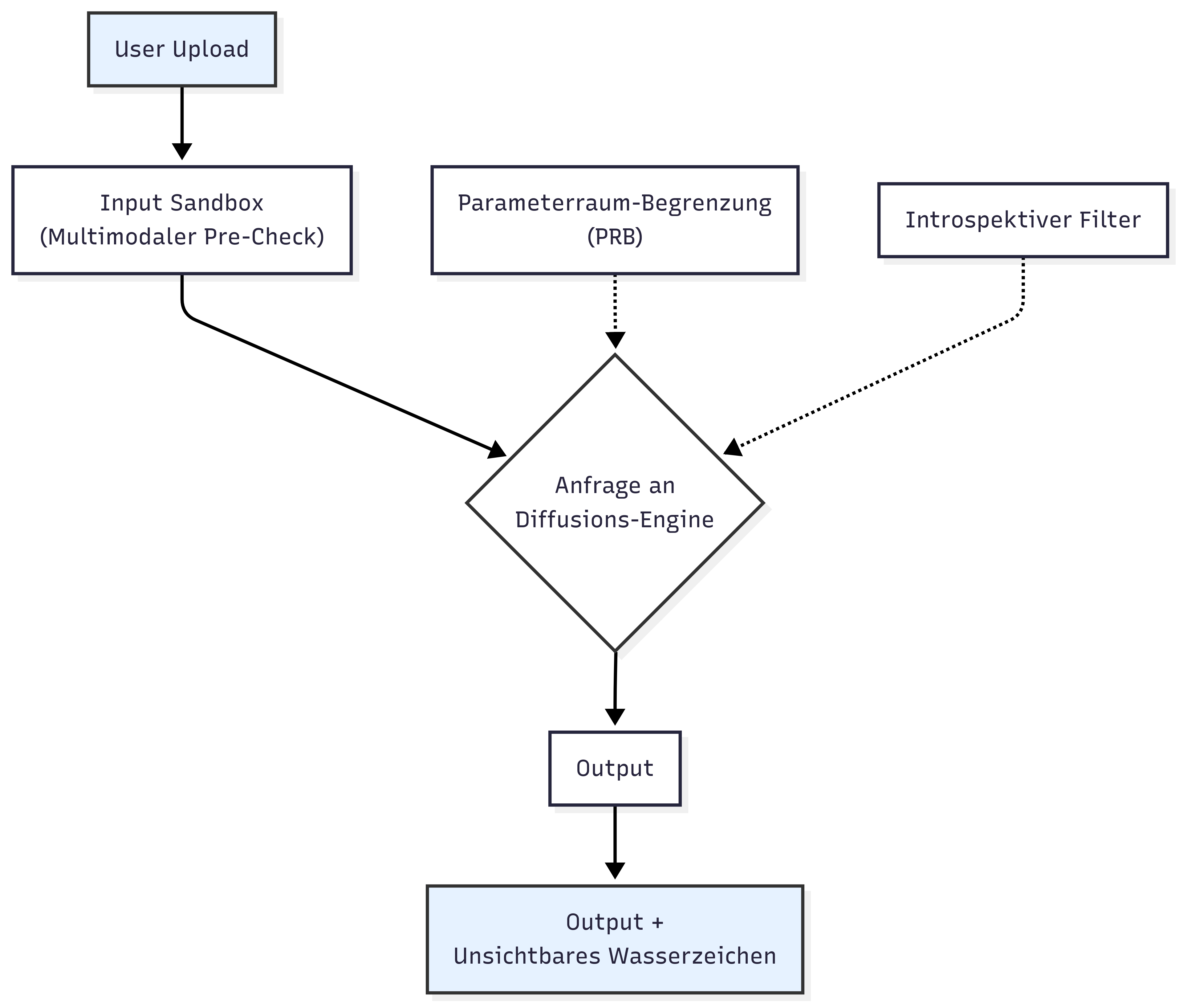

I call this protective layer the Multimodal Pre-Check or, pragmatically: the Input Sandbox.

The Input Sandbox is an isolated, automated inspection area that breaks down any uploaded image or video material into its components, analyzes it, and compares it with forensic checks against known abuse patterns.

It detects nudity, real faces, suspicious layer masks, and known pornographic fragments before the generative AI wastes a single GPU hour. Anyone who uploads clean content will not notice a thing. Anyone trying to trick the system will fail at the door.

The pre-check operates in multiple stages:

| Check Stage | Method | Purpose |

|---|---|---|

| 1. Upload Decomposition | ffmpeg | Extraction of raw data. Videos are broken down frame by frame into individual images to enable granular analysis. |

| 2. Perceptual Hashing | ImageHash | Creation of a unique "fingerprint" for each image/frame and comparison with databases of known pornographic sequences. |

| 3. Face ID Matching | InsightFace / Mediapipe | Precise detection of whether real, identifiable faces are present in the source material that could be misused for a face-swap. |

| 4. Nudity Classification | NudeNet / Equivalents | A specialized classifier assesses whether the upload contains explicit or implied nudity. |

| 5. Context Matching | Internal Logic | The user's planned prompt is compared with the results of the analysis. If there is a contradiction or a high probability of abuse (e.g., nudity + real face), the process is immediately and strictly terminated. |

| 6. Forensic Logging | Secure Protocol | Each check is logged in detail and stored encrypted to provide an unbroken chain of evidence in the event of repeated abuse attempts. |

The Input Sandbox blocks abuse on four crucial levels:

1. Preventive: No diffusion-based faceswap on a pornographic source video is possible without the filter detecting it at the time of upload. The attack is stopped before it begins.

2. Resource-Optimized: No expensive GPU hours are wasted on generating illegal or harmful content. The check is performed quickly and efficiently at the CPU level.

3. Evidence-Capable: Anyone who repeatedly tries leaves forensic traces (hashes, IP addresses, etc.) that can be immediately passed on to takedown services, platform moderators, or, in extreme cases, to authorities.

4. Combinable: The sandbox is not a replacement, but a supplement. It works hand in hand with the other mechanisms I have proposed, such as the introspective filter and parameter-space restriction (PSR).

The technical implementation is not rocket science but can be realized with stable and widely available open-source libraries. The challenge is not technical feasibility, but the will to implement it.

Video Processing: ffmpeg reliably splits videos into frames.

Face & Body Detection: OpenCV and Mediapipe detect faces, contours, and poses.

Face Identification: InsightFace provides precise Face ID matching.

Nudity Classification: Open-source classifiers like NudeNet reliably flag sensitive image content.

Image Hashing: Libraries like ImageHash detect known material even with minor changes.

Isolation: The entire inspection process must run in an isolated environment (Docker, Firecracker VMs) to prevent manipulation.

The pre-check must be placed before the diffusion engine, be API-integrated, and mandatory for every inpainting, outpainting, or face-swap session. No exceptions.

The Input Sandbox is the final bolt on the machine's door. It does not replace the filtering of the thought process, but it makes it operable in the first place by preventing the AI from having to work with dirty tools.

Anyone who cuts corners on the pre-check is not saving on costs, but on dignity. Any manufacturer who advertises AI video editing but does not implement a robust input sandbox is operating an open gateway for digital violation. With this architecture, there are no more excuses—anyone who continues to allow abuse is condoning it.